AutoGen vs crewAI: Which framework should you choose?

So, you're wondering which framework to choose for your agentic app and you've come across AutoGen and crewAI. Both are popular, but which one suits you better?

What's up!

I'm going to keep this short, so I won't bore you with definitions today. Instead, I'll focus on the differences and strengths of AutoGen and crewAI so that you can decide which one works for you.

In case you don’t know, both frameworks achieve similar results, but there are key differences to consider. Keep reading to find out what they are and how they could sway you one way or the other.


First, the similarities

Both frameworks:

- Are written in Python

- Are designed to create AI agents

- Support multi-agent conversations

- Can integrate with local LLMs

- Allow for human input during execution

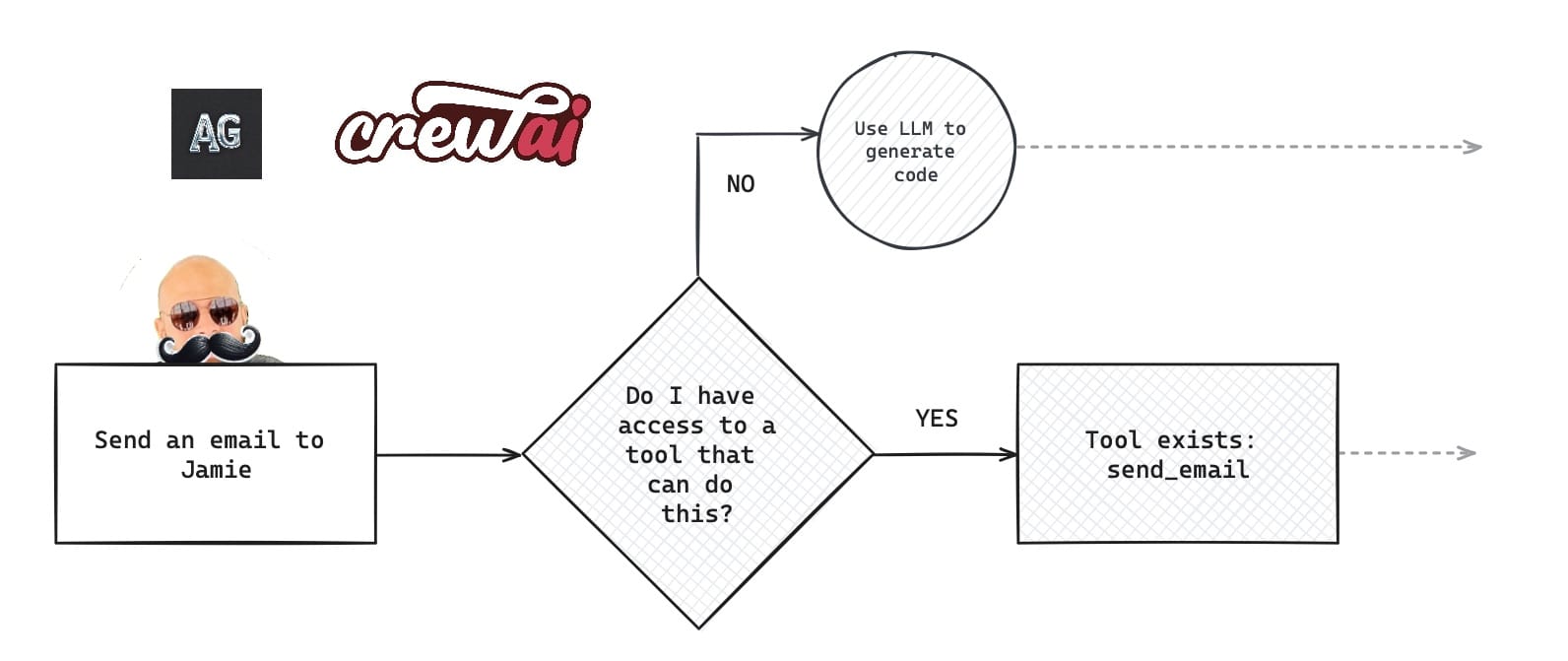

And finally, both frameworks use tools or functions to accomplish tasks.

Let's start with AutoGen (because it's older)

The name AutoGen is most likely short for "autogenous", meaning "arising from within or from a thing itself." Microsoft released it in October 2023 in collaboration with teams from Penn State University and the University of Washington.

Here's how they describe it:

AutoGen is a framework that enables the development of LLM applications using multiple agents that can converse with each other to solve tasks. AutoGen agents are customizable, conversable, and seamlessly allow human participation. They can operate in various modes that employ combinations of LLMs, human inputs, and tools.

A top feature in AutoGen is the ability to execute code in a Docker container. This isolates the generated code from your own environment, so unexpected issues stay inside the container. Don't downplay this: running LLM-generated code directly on your machine is a serious safety concern.
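To make the isolation idea concrete, here's a minimal sketch of what "run it in a container" boils down to. This is not AutoGen's internal implementation or its API. The helper name `build_docker_command`, the `python:3.11-slim` image, and the `generated/solution.py` path are all assumptions for illustration. The sketch only builds the command, so it works even if Docker isn't installed.

```python
from pathlib import Path


def build_docker_command(script: Path, image: str = "python:3.11-slim") -> list[str]:
    """Build a `docker run` command that executes `script` in a throwaway container.

    Hypothetical helper for illustration; not part of AutoGen.
    """
    workdir = script.resolve().parent
    return [
        "docker", "run",
        "--rm",                            # delete the container when it exits
        "--network", "none",               # generated code gets no network access
        "-v", f"{workdir}:/workspace:ro",  # mount the script's folder read-only
        "-w", "/workspace",                # run from the mounted folder
        image,
        "python", script.name,
    ]


if __name__ == "__main__":
    cmd = build_docker_command(Path("generated/solution.py"))
    print(" ".join(cmd))
    # To actually run it (requires Docker):
    # import subprocess; subprocess.run(cmd, timeout=60, check=False)
```

The point is that the generated script only sees a read-only folder and has no network, so a bad script can't touch the rest of your machine.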

How AutoGen agents communicate

The whole point of agents is to give them a problem and have them work together until they solve it, right? But how do agents communicate in AutoGen?

The following is a list of supported conversation patterns in AutoGen:

- Two-Agent Chat: This is the simplest pattern. It involves two agents, usually a UserProxyAgent and an AssistantAgent. (Got a few minutes and want to learn more? Check my video explaining the two-agent workflow in AutoGen.)

- Sequential Chat: This pattern also involves two agents for each task, because a sequential chat is a chain of conversations where each new one starts with a summary of what happened in the previous one.

- Group Chat: This pattern involves a pool of agents, but they're not alone. A special "manager" agent called GroupChatManager coordinates when and which agent handles a message. The manager supports these strategies:

  - Auto

  - Manual

  - Random

  - Round robin

- Nested Chat: Think of this as a "sub-conversation", a separate process that handles a specific issue within a problem and then returns a summary of the sub-conversation to the initiator. For instance, when you order food for delivery on an app, there might be a chat between the restaurant and the driver picking up your order. You're not in on it, but you get the summary of that exchange: your order is done and on its way.
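If the patterns above feel abstract, here's a toy sketch of two of them in plain Python: a sequential chat that carries a summary forward, and a group chat manager picking the next speaker. None of this is AutoGen's API. The names `sequential_chats` and `pick_speaker` are made up for illustration, and the "agents" are ordinary functions rather than LLM-backed agents. The "auto" and "manual" strategies are left out because they need an LLM or a human to decide.

```python
import random
from typing import Callable, Optional

# An "agent" here is just a function: it receives a message and returns a reply.
Agent = Callable[[str], str]


def sequential_chats(steps: list[tuple[str, Agent]]) -> str:
    """Run chats one after another; each new chat starts with the previous summary."""
    carryover = ""
    for task, agent in steps:
        prompt = f"{task}\nContext: {carryover}" if carryover else task
        carryover = agent(prompt)  # in this toy version the reply doubles as the summary
    return carryover


def pick_speaker(agents: list[str], last: Optional[str], strategy: str,
                 rng: Optional[random.Random] = None) -> str:
    """Toy version of a group chat manager choosing who speaks next."""
    if strategy == "round_robin":
        if last is None:
            return agents[0]
        return agents[(agents.index(last) + 1) % len(agents)]
    if strategy == "random":
        return (rng or random).choice(agents)
    raise ValueError(f"unsupported strategy: {strategy}")


if __name__ == "__main__":
    team = ["planner", "coder", "reviewer"]
    speaker = None
    for _ in range(4):
        speaker = pick_speaker(team, speaker, "round_robin")
        print(speaker)  # cycles through the team and wraps around
```

In the real framework, the manager does this selection for you on every turn.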

How AutoGen agents perform tasks

AutoGen agents use tools to perform actions. An agent could ask an LLM to generate code for a task, but in most cases, especially when you already know how to handle the task (sending an email, for example), you can give the agent your own code instead. That code is the tool.

Let's say you need one of your agents to send an email. The most obvious way is to register a send_email function and let your agent use it. The function would hold your SMTP and other settings.
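Here's a rough sketch of what "registering a tool" means, using only the Python standard library. It's a simplified stand-in, not AutoGen's registration API. The `tool` decorator, the `TOOLS` registry, `call_tool`, and the `agent@example.com` sender are assumptions for illustration. The email is built but not sent, so the sketch runs offline.

```python
from email.message import EmailMessage
from typing import Callable

# Hypothetical registry mapping tool names to plain Python functions.
TOOLS: dict[str, Callable[..., object]] = {}


def tool(func):
    """Register a function under its own name so an agent can call it."""
    TOOLS[func.__name__] = func
    return func


@tool
def send_email(to: str, subject: str, body: str) -> EmailMessage:
    """Build the email. Actual sending is left out so the sketch runs offline."""
    msg = EmailMessage()
    msg["From"] = "agent@example.com"  # placeholder sender
    msg["To"] = to
    msg["Subject"] = subject
    msg.set_content(body)
    # With real SMTP settings you would pass `msg` to smtplib.SMTP(...).send_message(msg).
    return msg


def call_tool(name: str, **kwargs):
    """Roughly what happens when the LLM asks for a tool by name."""
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    return TOOLS[name](**kwargs)


if __name__ == "__main__":
    message = call_tool("send_email", to="sam@example.com",
                        subject="Weekly report", body="All tasks are done.")
    print(message["To"], "|", message["Subject"])
```

The agent never writes email code itself. It only decides when to call send_email and with which arguments.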

Up next: crewAI (The new kid on the block)

"crew"-AI refers to a crew (or a team) of AI agents. Released by João Moura on November 14, 2023, crewAI has gained popularity, with over 12.8k stars on GitHub at the time of writing. It's often regarded as a simpler and more organized alternative to AutoGen, though this is a subjective comparison.

Here's the official product description:

Cutting-edge framework for orchestrating role-playing, autonomous AI agents. By fostering collaborative intelligence, CrewAI empowers agents to work together seamlessly, tackling complex tasks.

Two crewAI features stand out. The first is expected output: you specify what a task's result should look like (e.g., a bullet-point list instead of a paragraph), which steers the agent toward the result you want. The second is delegation: an agent hands off a task to another agent that is better suited to handle it.
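Both ideas can be sketched in a few lines of plain Python. This is a toy model, not crewAI's classes: `ToyAgent`, `ToyTask`, the `skill` field, and the `assign` function are hypothetical names invented for the example, and the real framework lets an LLM make the delegation decision.

```python
from dataclasses import dataclass, field


@dataclass
class ToyAgent:
    role: str
    skills: set[str] = field(default_factory=set)
    allow_delegation: bool = True


@dataclass
class ToyTask:
    description: str
    expected_output: str  # tells the agent what the result should look like
    skill: str            # hypothetical field: the skill this task needs


def build_prompt(task: ToyTask) -> str:
    """Attach the expected output to the task so the agent knows the target format."""
    return f"{task.description}\nExpected output: {task.expected_output}"


def assign(task: ToyTask, owner: ToyAgent, crew: list[ToyAgent]) -> ToyAgent:
    """Return who should do the task: the owner, or a better-suited coworker."""
    if task.skill in owner.skills or not owner.allow_delegation:
        return owner
    for coworker in crew:
        if coworker is not owner and task.skill in coworker.skills:
            return coworker
    return owner  # nobody is better suited, so the owner keeps the task


if __name__ == "__main__":
    writer = ToyAgent("Writer", {"writing"})
    researcher = ToyAgent("Researcher", {"search"})
    task = ToyTask("Find three recent AI papers",
                   "A bullet-point list of three titles", "search")
    print(build_prompt(task))
    print("Handled by:", assign(task, writer, [writer, researcher]).role)
```

Here the writer owns the task but lacks the search skill, so the task goes to the researcher.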

How crewAI agents perform tasks

crewAI comes with built-in tools that enable agents to perform tasks. You can think of tools as skills or functions that agents use whenever they are faced with a problem that the tool can resolve.

For instance, if an agent needs to search the contents of a website, it could use the built-in WebsiteSearchTool.

If you're not happy with the built-in tools, crewAI lets you define your own custom tools, or you can choose from the many LangChain tools, since crewAI is built on top of LangChain.

How crewAI agents communicate

There are currently two patterns in crewAI:

- Sequential: This pattern executes tasks in a linear order, where each step relies on the output of the previous one. For example: scraping a webpage, summarizing its content, and then sending the summary via email.

- Hierarchical: This is a more advanced pattern where an LLM-powered agent acts as a "manager", delegating tasks, validating outputs, and orchestrating communication between agents. Think of it like a Group Chat in AutoGen.
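To see the difference between the two patterns, here's a minimal sketch in plain Python. It's an illustration under simplifying assumptions, not crewAI's implementation: `run_sequential` and `run_hierarchical` are made-up names, the workers are ordinary functions, and the "manager" is a simple loop with a validation check, whereas in crewAI the manager is powered by an LLM.

```python
from typing import Callable

Worker = Callable[[str], str]


def run_sequential(steps: list[Worker], first_input: str) -> str:
    """Each step receives the previous step's output."""
    result = first_input
    for step in steps:
        result = step(result)
    return result


def run_hierarchical(tasks: list[tuple[str, str]], workers: dict[str, Worker],
                     validate: Callable[[str], bool]) -> list[str]:
    """A manager delegates each task by role and checks the result once."""
    outputs = []
    for role, payload in tasks:
        result = workers[role](payload)
        if not validate(result):
            # One retry with feedback; a real manager could reassign the task instead.
            result = workers[role](payload + " (retry: previous output rejected)")
        outputs.append(result)
    return outputs


def scrape(url: str) -> str:
    return f"page text from {url}"  # stand-in for a real scraper


def summarize(text: str) -> str:
    return f"summary of [{text}]"


def email(text: str) -> str:
    return f"emailed: {text}"


if __name__ == "__main__":
    print(run_sequential([scrape, summarize, email], "example.com"))
```

The sequential run mirrors the scrape, summarize, email example above. The hierarchical run adds a middle layer that decides who works on what and whether the output is acceptable.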

Which AI tool is better - crewAI or AutoGen?

While both have their strengths, crewAI is unique in its modular design, task delegation between agents, and its native LangChain integration. On the other hand, AutoGen's containerized code execution support is a winner in terms of safety. Both tools integrate seamlessly with OpenAI as well as local LLMs.

My personal take: I found it slightly easier to start with crewAI, but I also felt that AutoGen excels at handling ambiguous requests.

So? Which one will YOU choose? Let me know in the comments because other readers will find your contribution valuable!
