What is the difference between Embedchain and LangChain?

Another day, another data framework... We can't catch a break! One of the latest kids on the block, Embedchain seems to be gaining popularity, so I took it for a spin and wrote this post to show you how it's different from one of the most popular data frameworks out there, LangChain.

Introduction

Last year was no doubt the year of AI, thanks to ChatGPT and the explosion of apps built around the OpenAI API. This prompted developers to extend the knowledge of LLMs with private information by connecting them to vector databases.

Because of this, RAG (retrieval-augmented generation) took off.

I've been writing about frameworks like LangChain, LlamaIndex, and Semantic Kernel that let us build RAG apps easily, and today is no different. We're going to talk about another framework, one that was built specifically for RAG.

Enter Embedchain!

Introduction to Embedchain

Embedchain is an open-source framework for Python that allows for the easy creation of ChatGPT-like bots over any dataset by implementing the RAG architecture. It enables the embedding of resources such as audio, video, text, and PDF with a single line of code, making it simple to create chatbots for various types of data.

The library handles the process of chunking, embedding, and storing content automatically. It also takes care of connecting the vectorized content to your choice of LLM.
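If you're wondering what "chunking" means in practice, here's a minimal sketch in plain Python. To be clear, this is not Embedchain's actual implementation. The chunk_text function, the character-based splitting, and the chunk_size and overlap values are all assumptions I made for illustration.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into fixed-size character chunks that overlap slightly.

    Hypothetical helper for illustration only; real libraries often split
    on sentences or tokens rather than raw characters.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")

    step = chunk_size - overlap  # how far the window moves each time
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once the current window has reached the end of the text
        if start + chunk_size >= len(text):
            break
    return chunks


print(chunk_text("abcdefghij", chunk_size=4, overlap=1))
```

The overlap is there so that a sentence cut at a chunk boundary still shows up whole in at least one chunk. Each chunk would then be embedded and stored in the vector database.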

Introduction to LangChain

LangChain is arguably the most widely used data framework out there. It is also an open-source framework available for Python and JavaScript that simplifies integrating LLM capabilities into your application. LangChain uses chains that represent tasks linked together to perform a goal.

The framework comes with built-in support for various data loaders that can retrieve, organize, and create embeddings for use with LLMs.
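To make the idea of a chain a bit more concrete, here's a toy sketch in plain Python. It does not use the LangChain API at all. The make_chain, build_prompt, fake_llm, and tidy names are hypothetical and only show the concept of linking small tasks so that the output of one becomes the input of the next.

```python
from functools import reduce
from typing import Callable

Step = Callable[[str], str]


def make_chain(*steps: Step) -> Step:
    """Return a function that feeds the output of each step into the next."""
    def run(value: str) -> str:
        return reduce(lambda acc, step: step(acc), steps, value)
    return run


def build_prompt(question: str) -> str:
    # Task 1: turn the raw question into a prompt
    return f"Answer briefly: {question.strip()}"


def fake_llm(prompt: str) -> str:
    # Task 2: stand-in for a real model call (assumption: no network access here)
    return f"[model reply to: {prompt}]"


def tidy(reply: str) -> str:
    # Task 3: post-process the reply by trimming the surrounding brackets
    return reply.strip("[]")


qa_chain = make_chain(build_prompt, fake_llm, tidy)
print(qa_chain("  What is RAG? "))
```

In a real LangChain app, each step would be a prompt template, a model, a retriever, or an output parser, but the linking idea is the same.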

Embedchain example

There's no better way to understand how a library works than to write a simple app. Below, I am going to show you exactly how we can do this in three easy steps!

Step 1: Prepare your environment

Let's create our project folder. We'll call it embedchain-gswithai-example:

mkdir embedchain-gswithai-example

Let's cd into the new directory and create our main .py file:

cd embedchain-gswithai-example

touch main.py

(Optional, but recommended) Create and activate a virtual environment:

python -m venv venv

source venv/bin/activate

Great, with the above setup, let's install the embedchain package using pip:

pip install embedchain

Step 2: Write the code

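import os

os.environ["OPENAI_API_KEY"] = "YOUR_API_KEY"  # make the OpenAI key available to Embedchain

from embedchain import App

app = App()  # create an app with the default settings
app.add("https://www.gettingstarted.ai/about/")  # load the page so it can be chunked, embedded, and stored
response = app.query("What is Getting Started with AI about?")  # ask a question about the loaded content
print(response)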

Replace YOUR_API_KEY with your OpenAI API key.

That's it! Seriously. Behind the scenes, Embedchain retrieves the content from the provided URL and then splits, vectorizes, and stores the data in a Chroma database. Then, it performs a similarity search based on your query and passes the matching content to the LLM, which then responds accordingly.
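The similarity search and prompt-building steps described above can be sketched in a few lines of plain Python. Big caveat: this is a toy version built on my own assumptions, not what Embedchain or Chroma actually do. The embed function just counts words (real systems use a learned embedding model), and the embed, cosine, retrieve, and build_prompt names are made up for this example.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts instead of a learned vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, chunks: list[str], k: int = 1) -> list[str]:
    """Return the k chunks most similar to the query."""
    query_vec = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(query_vec, embed(c)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Combine the retrieved chunks and the question into one prompt for the LLM."""
    joined = "\n".join(context)
    return f"Context:\n{joined}\n\nQuestion: {query}"


chunks = [
    "The blog covers programming tutorials about AI.",
    "Bananas are rich in potassium.",
    "Chroma is a vector database.",
]
question = "What is a vector database?"
print(build_prompt(question, retrieve(question, chunks)))
```

The prompt that comes out of build_prompt is what would be sent to the LLM. Only the most relevant chunks make it in, which is the whole point of RAG.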

Step 3: Run the code!

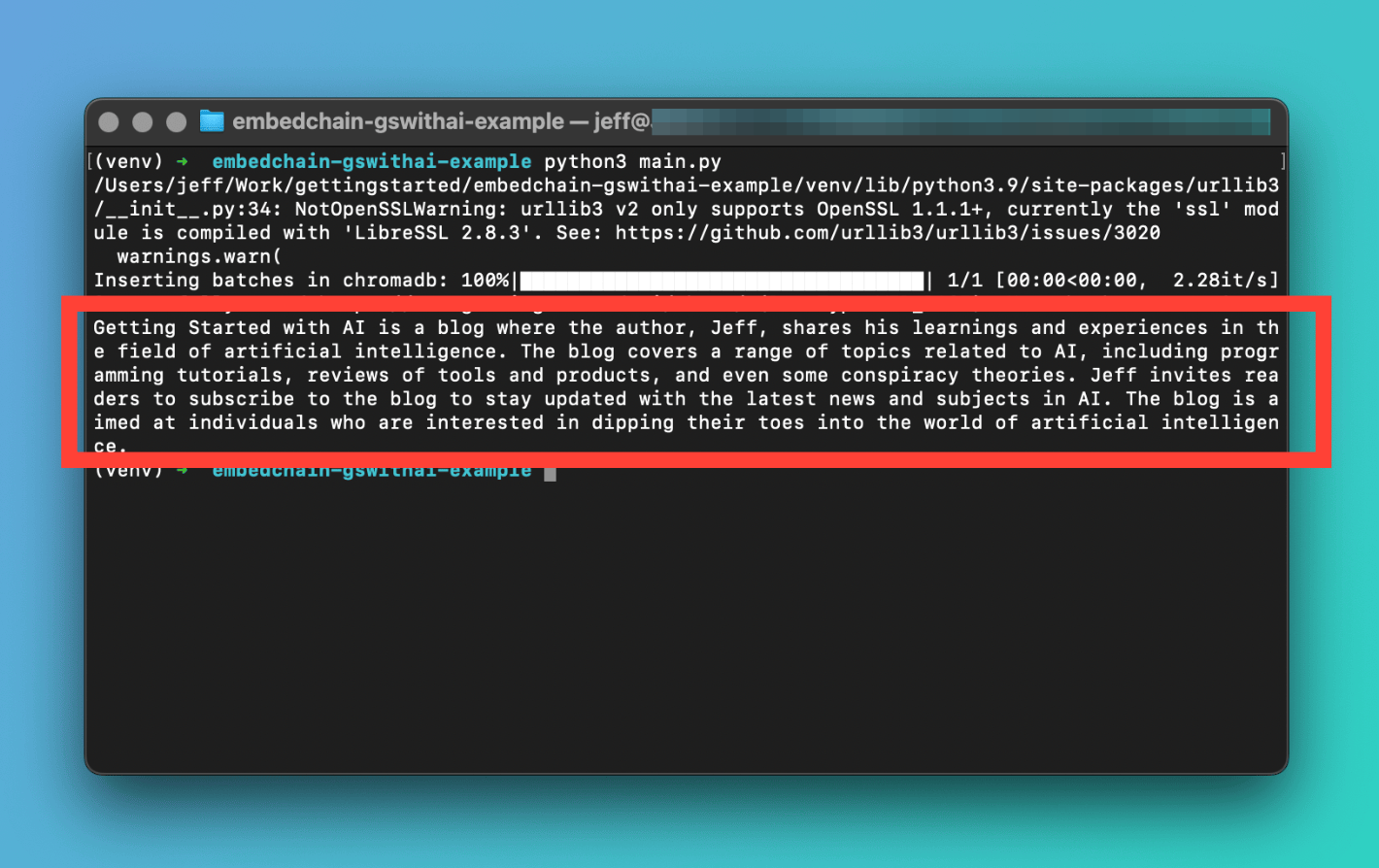

Okay, let's run the code above to make sure all works as expected. In your terminal window type python3 main.py and hit return.

Aaaaaaand voila!

Getting Started with AI is a blog where the author, Jeff, shares his learnings and experiences in the field of artificial intelligence. The blog covers a range of topics related to AI, including programming tutorials, reviews of tools and products, and even some conspiracy theories. Jeff invites readers to subscribe to the blog to stay updated with the latest news and subjects in AI. The blog is aimed at individuals who are interested in dipping their toes into the world of artificial intelligence.

Here's a screenshot from my terminal:

main.py in the terminalNow there's an obvious tradeoff when using such a high-level framework. Can you guess what it is? Let me know in the comments below!

The big question

So, what's the difference between LangChain and Embedchain?

Embedchain and LangChain are both tools that simplify building RAG apps; however, they serve different purposes. LangChain is the broader framework in terms of capabilities and features, and it provides more in-depth control and customization options.

On the other hand, Embedchain is a framework that makes creating ChatGPT-like bots over any dataset as simple as writing a few lines of Python code. It's worth mentioning that Embedchain is a wrapper on top of LangChain, meaning more abstraction and less control.

My opinion: If you're looking to add simple ChatGPT-style functionality to your app quickly, consider Embedchain. Otherwise, it's best to stick with LangChain.

Conclusion

Out of all the frameworks and tools that I've tested, Embedchain wins hands down in terms of the lines of code needed to build a RAG app. However, things get trickier and more complicated when you want to customize the default behavior.

Given its simplicity, you should consider Embedchain if you want the fastest route to production. There is more to the framework than what I've covered in this post, so make sure you go through the official docs.

LangChain is widely adopted and lets you build a ChatGPT-style bot as well, but you'll need to wire all the parts together yourself, which may or may not be what you want given your use case.

I'd love to know what you're working on and if you have any specific questions about this post. Please leave a comment below and join me on X for more updates.
