Add AI capabilities to your C# application using Semantic Kernel from Microsoft (Part 1)

In this tutorial, I am going to introduce you to a superhero. He specializes in finding and busting myths about AI and then shares them on social media. He's all about facts and we're going to build him using Microsoft's Semantic Kernel.

I love C#

I started writing C# after moving from VB.NET in 2004. It started as a hobby, but I soon found myself building many projects for different platforms using the programming language.

Fast forward 20 years and C# is better than ever. Microsoft made sure it's always a top choice for programmers.

Today, we're going to see how you, a C# developer swimming in a sea of Python and Node.js documentation about building RAG and AI-powered apps, can integrate AI capabilities into your C# app.

So, what are we building today?

Almost a year ago, Microsoft released Semantic Kernel, an open-source SDK that supports C#, Python, and Java out of the box. Its purpose is to facilitate the integration of AI capabilities in your app.

Today, we're going to build a simple C# console application with AI superpowers. When I say superpowers, I mean it!

The superhero no one needs

He's here and he's awesome. Introducing Factman!

We're going to create a simple C# console app with the Semantic Kernel SDK. Then, we'll write some code to make GPT give us a common myth about AI. We'll take that myth and bust it by fact-checking it. Finally, we'll simulate posting the busted myth on social media platforms like Twitter. (Does anyone call it X? 👀)

Yup, you guessed it! Our app is Factman. He's busting myths all day, every day. No one asked him to, but he's here anyway.

Introduction to Semantic Kernel

What is Semantic Kernel?

As per Microsoft's definition:

Semantic Kernel is an open-source SDK that lets you easily build agents that can call your existing code. As a highly extensible SDK, you can use Semantic Kernel with models from OpenAI, Azure OpenAI, Hugging Face, and more! By combining your existing C#, Python, and Java code with these models, you can build agents that answer questions and automate processes. - Microsoft

Semantic Kernel gives us the ability to easily combine AI services like OpenAI, Azure OpenAI, and Hugging Face and add AI capabilities into our C#, Java, and Python apps.

Why use Semantic Kernel?

The most obvious reason is native C# support. Semantic Kernel is an abstraction layer that simplifies the integration of AI components and functionalities in a single SDK.

This automatically makes the AI components within your app modular, extensible, and configurable.

Semantic Kernel building blocks

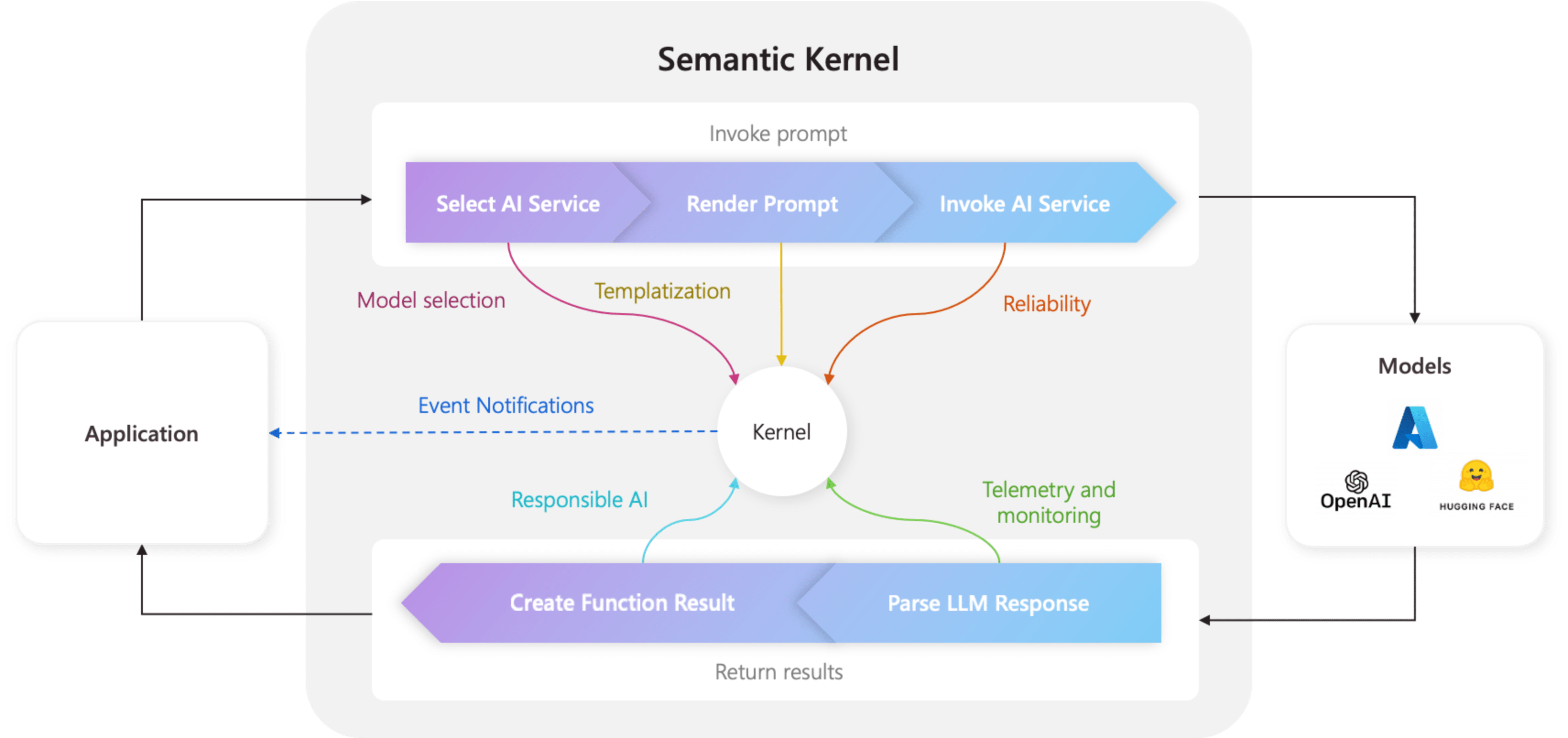

The Kernel in Semantic Kernel is the center that connects everything. Anything you do using the Semantic Kernel SDK will go through the Kernel. It is a centralized place to manage your AI app and serves as a great place for middleware such as logging and telemetry.

Here's a diagram showing the Kernel at the center of all of the operations between the Application and Models:

So far so good? Great. We'll need to go over a couple of important components that make up Semantic Kernel. I'm specifically talking about Plugins and Services.

Semantic Kernel: Plugins

A plugin is a collection of functions that can be used by the Kernel to serve a purpose or a task.

The functions in a plugin can be Prompts or Native Functions:

- Prompts: These take a user request and send it to the LLM as a natural-language prompt to generate a response. Microsoft describes Prompts as the ears and mouth of your AI app.

- We use two files to describe our prompt functions: skprompt.txt and config.json.

  - skprompt.txt contains the text-based prompt that will be sent to the model.
  - config.json contains a description and the input parameters of the Prompt function. It implements the OpenAI plugin specifications.

- Native Functions: These make C# functions usable by the Kernel. They are used to extend the capabilities of your AI by executing code. Microsoft describes Native Functions as the hands of your AI app.
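To make the Prompt side more concrete, here is a minimal sketch that expresses the same two pieces (the prompt text and its description and input parameters) inline in C# instead of in files. It assumes a .NET 6 or later console project with the Microsoft.SemanticKernel NuGet package (1.x). The prompt wording and the input variable name are made up for illustration and are not the ones used later in this tutorial.

```csharp
using System;
using Microsoft.SemanticKernel;

// Plays the role of skprompt.txt: the text-based prompt, with {{$input}} as a placeholder.
// Assumption: this wording is invented for the sketch.
const string promptText = "Bust the following myth about AI in a funny way: {{$input}}";

// Plays the role of config.json: a description plus the input parameters.
var config = new PromptTemplateConfig(promptText)
{
    Name = "BustMyth",
    Description = "Bust a given myth in a funny way.",
    InputVariables =
    {
        new InputVariable { Name = "input", Description = "The myth to bust", IsRequired = true },
    },
};

// Creating the function does not call the model; invoking it through a Kernel would.
KernelFunction bustMyth = KernelFunctionFactory.CreateFromPrompt(config);
Console.WriteLine($"{bustMyth.Name}: {bustMyth.Description}");
```

Keeping the prompt in files, as described above, has the advantage that you can tweak the wording without recompiling. The inline form is handy for quick experiments.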

Remember our superhero Factman? Well, we're going to build him as a Semantic Kernel Plugin, and to give him his superpowers, we'll implement the following Functions:

- FindMyth: Get a common myth about AI.
- BustMyth: Bust a given myth in a funny way.
- AdaptMessage: Adapt the given input to a specified social media platform.
- Post: Publish a given input on a given social media platform.
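As a rough preview of what a Native Function can look like, here is a sketch of Post written as a plain C# method and exposed to the Kernel. It assumes a .NET 6 or later console project with the Microsoft.SemanticKernel NuGet package (1.x). The class name SocialPluginSketch, the parameter names, and the returned text are placeholders of mine; the real plugin is written in the next part.

```csharp
using System;
using System.ComponentModel;
using Microsoft.SemanticKernel;

// Register the plugin and call its function through the Kernel.
// No AI service is needed here because Post is plain C# code.
IKernelBuilder builder = Kernel.CreateBuilder();
builder.Plugins.AddFromType<SocialPluginSketch>("SocialPlugin");
Kernel kernel = builder.Build();

FunctionResult result = await kernel.InvokeAsync(
    "SocialPlugin",
    "Post",
    new KernelArguments { ["message"] = "Myth busted!", ["platform"] = "Twitter" });

Console.WriteLine(result.GetValue<string>());

// Hypothetical sketch of a native-function plugin (not the tutorial's final code).
public class SocialPluginSketch
{
    [KernelFunction, Description("Publish a given input on a given social media platform.")]
    public string Post(
        [Description("The message to publish")] string message,
        [Description("The target platform, for example Twitter")] string platform)
    {
        // Simulated only: nothing is sent over the network.
        return $"Posted to {platform}: {message}";
    }
}
```

The Description attributes matter more than they look: they are the text the model (or a planner) reads when deciding whether and how to call the function.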

For a complete overview, check out the official docs. Microsoft also added out-of-the-box plugins to standardize implementation; you can find these Core plugins here.

Semantic Kernel: Services

A Service refers to the AI capabilities that can be added to the kernel to perform tasks like text completion, chat completion, and text embedding generation.

It's easy to switch between Services to see which one performs better for your specific use case.

Take a look at the following code:

```csharp
builder.Services.AddOpenAIChatCompletion(
    "gpt-3.5-turbo",
    "YOUR_API_KEY");
```

AddOpenAIChatCompletion service example

This code adds the OpenAI chat completion service to the Kernel. Suppose you'd like to keep OpenAI in your staging environment but use a different service, such as Azure OpenAI, in production. You could rewrite the above as:

```csharp
if (environment == "Staging")
{
    builder.Services.AddOpenAIChatCompletion(
        "gpt-3.5-turbo",
        "YOUR_API_KEY");
}
else if (environment == "Production")
{
    builder.Services.AddAzureOpenAITextGeneration(
        //...
    );
}
```

Sample code to show conditional loading of Semantic Kernel Service into the Kernel

This way, the rest of your code will remain relatively the same and you will not need to refactor or change other components.
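To put the snippets above in context, here is a minimal sketch of a complete program that picks a Service by environment and then uses the Kernel the same way in both cases. It assumes a .NET 6 or later console project with the Microsoft.SemanticKernel NuGet package (1.x). The environment variable names are invented for this sketch, and I register chat completion in both branches (a swap from the text generation call above) so that the same prompt call works against either service.

```csharp
using System;
using Microsoft.SemanticKernel;

// Assumption: the environment name and credentials come from environment variables.
// The variable names below are made up for this sketch.
string environment = Environment.GetEnvironmentVariable("APP_ENVIRONMENT") ?? "Staging";

string Require(string name) => Environment.GetEnvironmentVariable(name)
    ?? throw new InvalidOperationException($"Missing environment variable {name}.");

IKernelBuilder builder = Kernel.CreateBuilder();

if (environment == "Production")
{
    // Azure OpenAI: deployment name, endpoint URL, and API key.
    builder.Services.AddAzureOpenAIChatCompletion(
        Require("AZURE_OPENAI_DEPLOYMENT"),
        Require("AZURE_OPENAI_ENDPOINT"),
        Require("AZURE_OPENAI_API_KEY"));
}
else
{
    // OpenAI: model ID and API key.
    builder.Services.AddOpenAIChatCompletion("gpt-3.5-turbo", Require("OPENAI_API_KEY"));
}

Kernel kernel = builder.Build();

// From here on, the code does not care which service was registered.
FunctionResult result = await kernel.InvokePromptAsync("Give me one common myth about AI.");
Console.WriteLine(result);
```

Reading keys from the environment rather than hard-coding "YOUR_API_KEY" also keeps secrets out of source control, which is worth doing even in a demo.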

Semantic Kernel: Planners

A planner is a function that comes up with a step-by-step plan to accomplish a certain user request. It uses the Plugins attached to the Kernel to complete the task and it's able to decide the sequence and required parameters based on the user request.

I am not going to dive any deeper into Planners, but basically they work by sending the user request to a large language model, which responds with the steps required to complete it. The planner then picks which Plugins to use to carry out those steps.
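If it helps to picture the idea, here is a toy sketch in plain C# with no Semantic Kernel involved. It is not the SDK's planner API: the "LLM" is replaced by a hard-coded stub that always returns the same three steps, and the functions are simple lambdas standing in for Plugin functions. All names here are hypothetical.

```csharp
using System;
using System.Collections.Generic;

// Stand-ins for plugin functions; a real Kernel would expose Prompts and Native Functions here.
var functions = new Dictionary<string, Func<string, string>>
{
    ["FindMyth"] = _ => "AI understands everything it reads.",
    ["BustMyth"] = myth => $"Busted: \"{myth}\" Not quite!",
    ["Post"] = message => $"Posted: {message}",
};

// A real planner would send the request and the function descriptions to the LLM
// and get the steps back. Here the plan is hard-coded to keep the sketch offline.
List<string> plan = ToyPlanner.CreatePlan("Find a myth about AI, bust it, and post it.");

string output = ToyPlanner.Execute(plan, functions, input: "");
Console.WriteLine(output);

static class ToyPlanner
{
    // Stub for the LLM call: ignores the request and returns a fixed sequence of steps.
    public static List<string> CreatePlan(string userRequest) =>
        new() { "FindMyth", "BustMyth", "Post" };

    // Runs the steps in order, feeding each step's output into the next one.
    public static string Execute(
        IReadOnlyList<string> plan,
        IReadOnlyDictionary<string, Func<string, string>> functions,
        string input)
    {
        string current = input;
        foreach (string step in plan)
        {
            current = functions[step](current);
        }
        return current;
    }
}
```

The interesting part of a real planner is exactly what this stub skips: letting the model choose the steps and their parameters from the function descriptions.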

For more detailed information, you can check the official Microsoft docs.

In the next part...

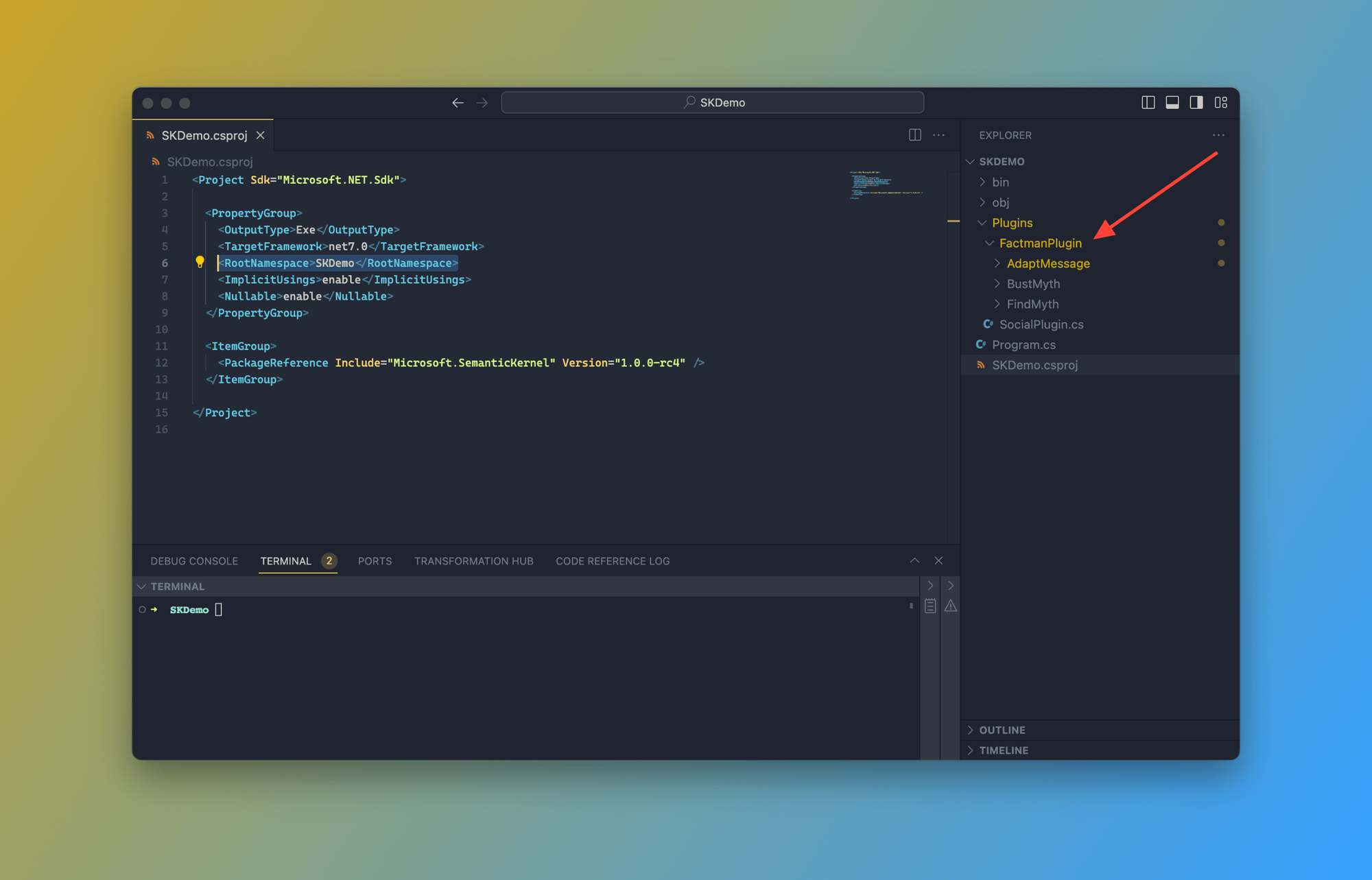

Next, we're going to code everything into a C# console app called SKDemo to bring our superhero to life!

- We'll build the following functions:

  - FindMyth
  - BustMyth
  - AdaptMessage
  - Post

- We'll create two Plugins:

  - FactmanPlugin: Responsible for all myth-related tasks.
  - SocialPlugin: Responsible for posting the final message to a specified social media platform.

Here's what the project will look like:

Closing thoughts

Thanks for reading Part 1. So far, you know what Semantic Kernel is, what it does, and which components are needed to make it work.

In the next part, we'll dive deeper into the project structure and write all the necessary code to bring Factman to life.

👉 Click here to read the next part of this tutorial

Happy coding! 👩‍💻🦸‍♂️

FAQ: Frequently asked questions

How is Semantic Kernel different from AutoGen?

Semantic Kernel and AutoGen are two different things. AutoGen is a framework that enables collaboration between agents to complete a request, while Semantic Kernel is an SDK for creating agents that use large language models (LLMs) to perform tasks.

What is an AI Agent?

An agent is an AI program that performs a specific task. A customer service agent is an example of an AI agent capable of natural language understanding, information retrieval, problem-solving, and more.

What is the difference between Semantic Kernel and LlamaIndex?

Semantic Kernel is focused on orchestrating AI services using Plugins and out-of-the-box Planners, while LlamaIndex is a data framework for LLM applications. It provides tools for creating RAG applications and excels at data retrieval, indexing, and storage.

Check this page for LlamaIndex tutorials and more information.

What is the difference between Semantic Kernel and LangChain?

Semantic Kernel and LangChain can both be used to manage LLMs (Large Language Models) and AI services but are implemented differently. Semantic Kernel uses Plugins and Planners and is an open-source SDK for C#, Python, and Java. In contrast, LangChain uses Chains to compose AI tasks and includes many ready-to-use features and plugins such as data ingestion, indexing, and retrieval capabilities.

Check this page for LangChain tutorials and more information.