Here's how to convert text to song with AI using Google's MusicLM

In this post, we're going to see how MusicLM lets us convert text into a song. We'll also look at the MusicLM download feature for storing the generated songs, and go over some thoughts on the future of music.

Introduction

Have you seen the latest Black Mirror episode on Netflix, Season 6, Episode 1, titled "Joan Is Awful"? It reminded me of thoughts I shared last month in a previous post. If you haven't seen the episode yet, I recommend you do. It is a beautiful visualization of how generative AI might one day create content on the fly. Here's the description of the episode from IMDb:

"An average woman is stunned to discover a global streaming platform has launched a prestige TV drama adaptation of her life - in which she is portrayed by Hollywood A-lister Salma Hayek." - IMDb

In my previous post about personalized exclusive content, I described how AI might one day be able to generate custom, personalized content on platforms such as Netflix and other streaming services. In this post, however, we're going to see how MusicLM could help music producers, as well as services like Spotify, generate (or help create) songs on the fly.

This is where Google's latest beta product, MusicLM, comes in. From a single descriptive text prompt, the model can generate a song with a realistic-sounding mix of instruments blended together nicely. Just as ChatGPT generates text (or answers) from a single prompt, MusicLM responds with a song instead of text.

The ChatGPT of Music and Songs

Recently, I was able to get my hands on Google's latest and greatest beta project, MusicLM. We're going to go over what MusicLM is, how to use its text-to-song feature, and how to use the MusicLM download feature to store the generated songs locally.

What is MusicLM

Briefly, it is a model that is able to generate music and songs from text inputs. Here is the full description from the MusicLM website:

We introduce MusicLM, a model generating high-fidelity music from text descriptions such as "a calming violin melody backed by a distorted guitar riff". MusicLM casts the process of conditional music generation as a hierarchical sequence-to-sequence modeling task, and it generates music at 24 kHz that remains consistent over several minutes. Our experiments show that MusicLM outperforms previous systems both in audio quality and adherence to the text description. Moreover, we demonstrate that MusicLM can be conditioned on both text and a melody in that it can transform whistled and hummed melodies according to the style described in a text caption. To support future research, we publicly release MusicCaps, a dataset composed of 5.5k music-text pairs, with rich text descriptions provided by human experts.

In a nutshell, MusicLM generates realistic-sounding music that closely mimics real instruments playing together, so the output sounds very close to an actual song composed by a music producer. So far, it is the most accurate AI model I've seen at translating a text input into a song.

Join the Waitlist

OK, so how can you get started with MusicLM? What steps do you need to take to try the model yourself?

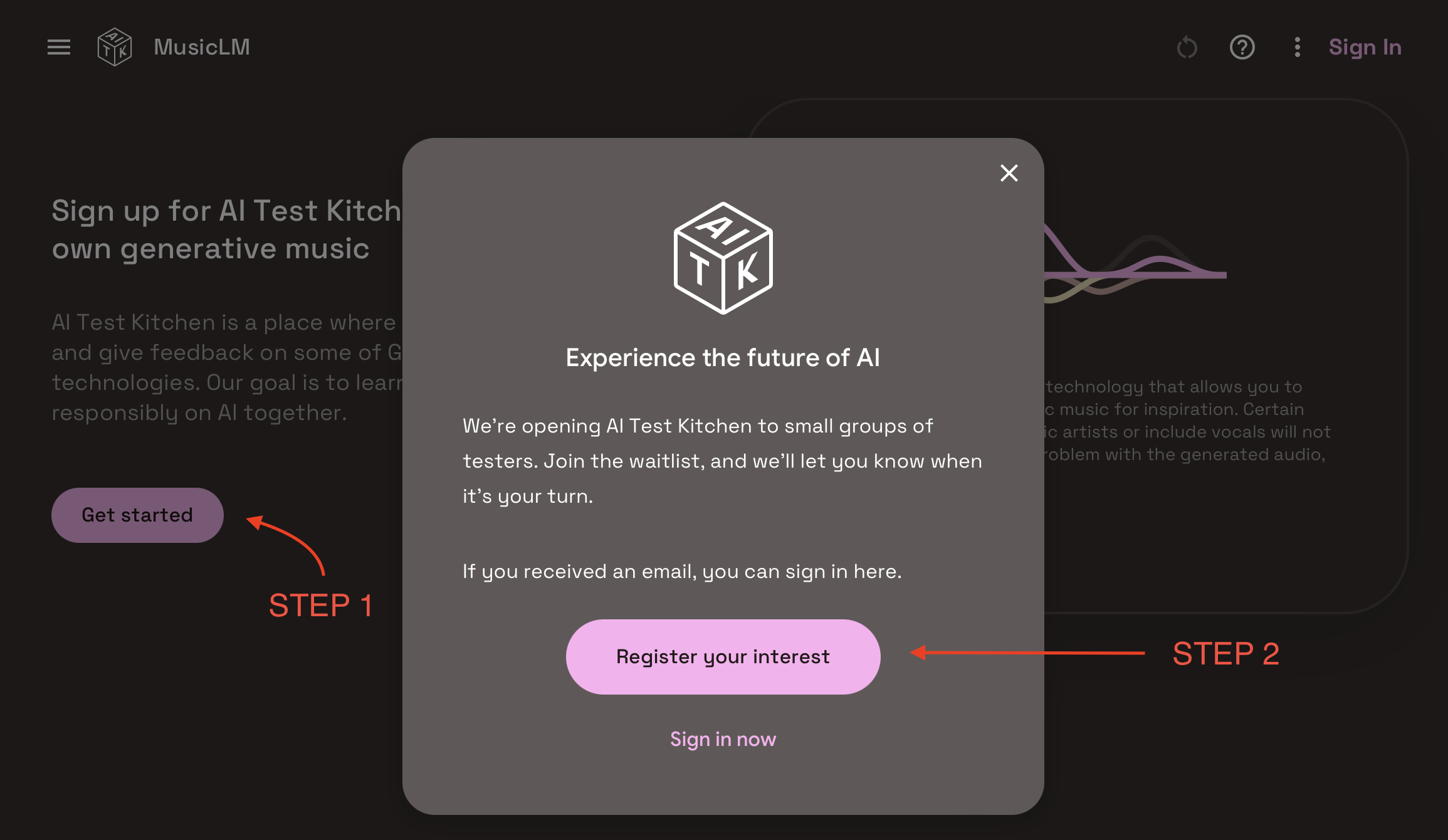

First, you'll need a Google account (I'm assuming you already have that), then:

- Go to the project's website (Right-click and open in a new tab)

- Click (or tap) on the "Get Started" button

- If you're not signed in, you can sign in and then enroll in the waitlist by clicking on "Register your interest" (As shown below)

After you register your interest, you'll receive an email once you've been admitted to the testers group and can try out MusicLM.

Giving it a Spin

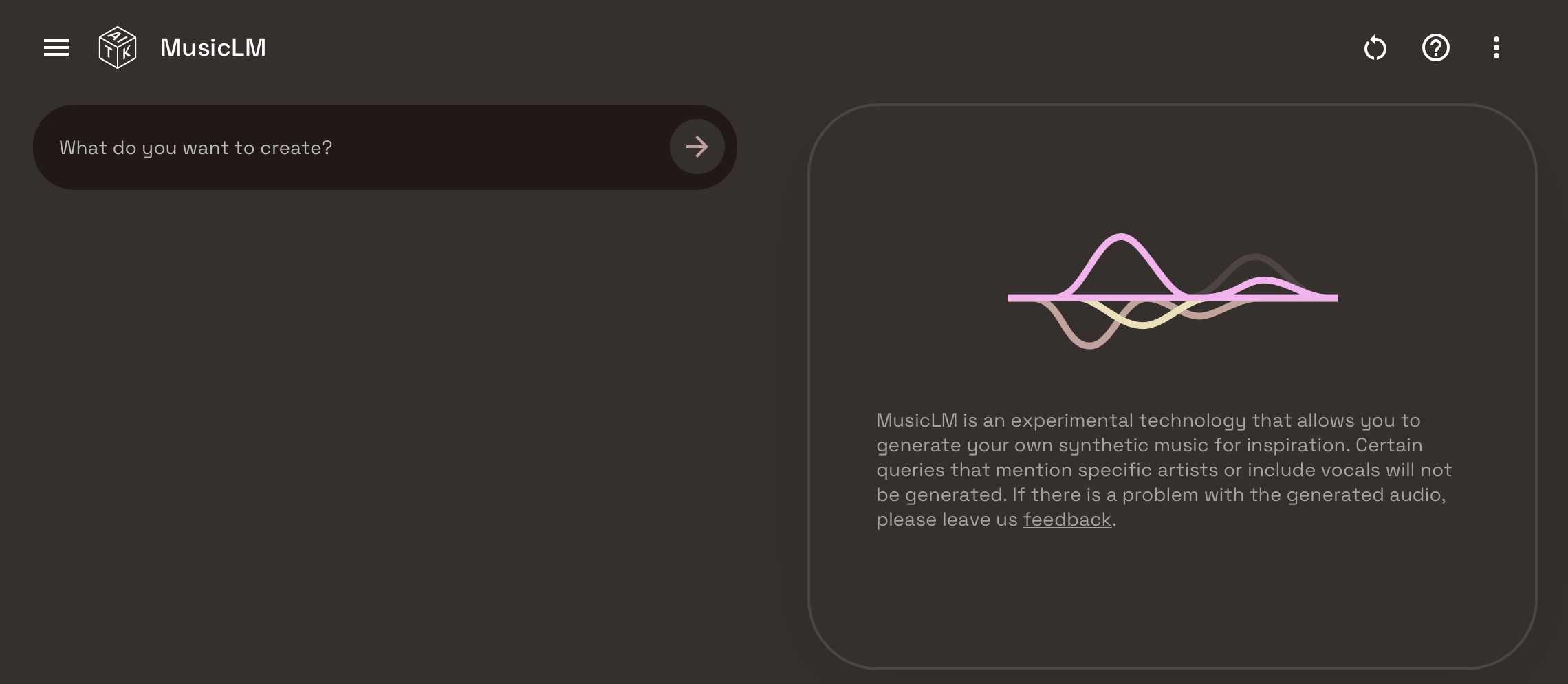

While you wait for that confirmation email, we're going to go through the steps needed to generate your song. Once you're no longer in the waitlist and approved to test the model, you can reload the page or load the website again. At this point, you should see a similar interface to the screenshot below:

It looks like ChatGPT, with a single text prompt on the left and the MusicLM player and history view on the right. Let's try the prompt "Electro Disco Instrumental With Keyboard" and hit Enter.

Here's the output:

OK, that's impressive! I know what it takes to arrange a nice melody with all the instruments playing in sync, while making sure each instrument's pattern doesn't clash with the overall sound. If a producer were to make this in FL Studio, it would take at least 10 to 15 minutes (if not more) to put together, yet MusicLM generated it instantly.

Download the Song

You can download a song once the model has generated it. I highly recommend saving it locally so it isn't lost. To do so, click the three dots and then 'Download', as shown below:

Thoughts on MusicLM

In the short term at least, after a couple of iterations and improvements to the model, I can easily see most podcasters, YouTubers, and content creators in general using this model (or similar ones) to generate intro or background music for their work. It's simply very easy and convenient.

This is a Big Deal for Music Production

For one, this is definitely a big deal for music production and for how compositions will be made in the future. Let's take one simple use case as an example of how it could be applied in the real world.

The New Adobe Photoshop

If you've been following AI news lately, you've probably seen a lot of posts about the new Photoshop and its ability to generate and complete photos. You select an area, describe what should fill it, and, like magic, the model generates the pixels needed to modify or complete the photo from that text input. Many graphic designers are praising it for making their editing work much easier.

Image-Line FL Studio

For music producers out there, the same idea could be applied to production tools such as FL Studio and others. You could be working on a piano sample and ask the model to complete the melody by describing how it should sound, or ask it to generate a guitar loop that fits your existing melody. I'm pretty sure we're going to see similar integrations very soon.

At the same time, the evolution of these generative AI models and tools could be destructive for many industries if not properly implemented, used, and regulated.

Conclusion

Today we've looked at MusicLM, a very interesting beta product from Google Labs: a model that takes a single line of descriptive text and outputs a complex, realistic-sounding song matching that description. It's only a matter of time before these models can generate complete soundtracks for large productions, as well as for many other applications.

I hope you sign up for the waitlist and try it yourself soon. While you wait for approval, if you enjoyed this article, I suggest you take a look at this post.

Thanks for reading!