Introduction to Augmenting LLMs with Private Data using LlamaIndex

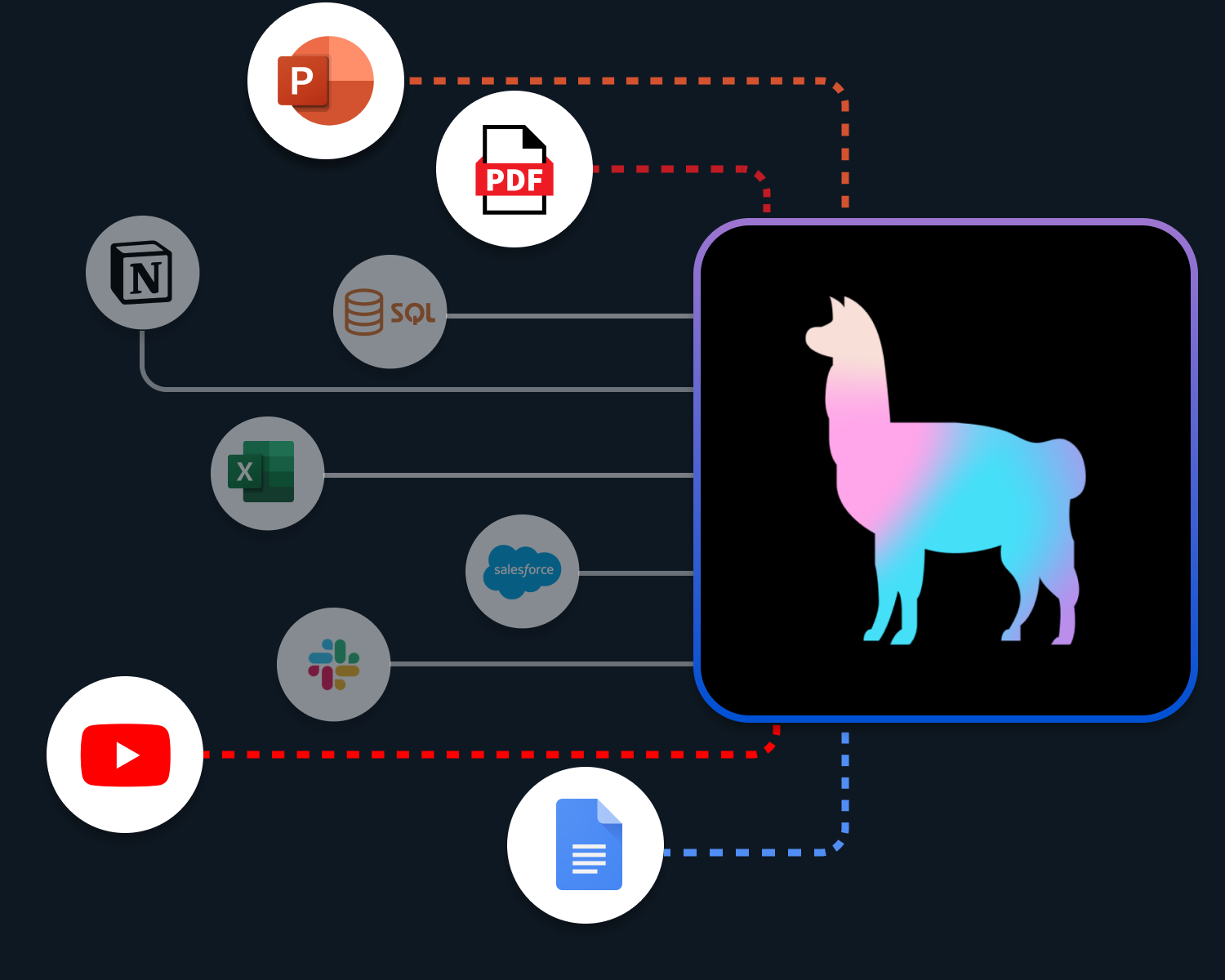

In this post, we're going to take a high-level look at how LlamaIndex bridges AI and private, custom data from multiple sources (APIs, PDFs, and more), enabling powerful applications.

Introduction

LlamaIndex, formerly known as GPT Index, offers a practical way to augment language-based AI systems such as LLMs (Large Language Models). These models are great at understanding and generating human-like text thanks to the extensive training they have undergone (think of ChatGPT). However, a big drawback is that they lack access to private or specialized information, which might be locked away in different applications or file formats.

LlamaIndex gives LLMs (such as the models behind ChatGPT) the ability to work not just with public knowledge but also with your organization-specific data stored in multiple places, such as databases, APIs, PDFs, and others.

Overview of LlamaIndex (formerly GPT Index)

Large Language Models (LLMs for short) are pre-trained on large, rich datasets drawn from online and offline public sources such as Wikipedia, mailing lists, source code, and more.

While the information from such sources is invaluable for training LLMs, it is of little to no use when it comes to private or niche-specific data that the model never saw during training. Such data could be stored behind APIs or in PowerPoint decks, SQL databases, PDF files, and more.

LlamaIndex works by providing the following tools:

- Data connectors: Connects to your private data from different sources such as APIs, PDFs, SQL, and more.

- Data indexes: Structures your data in a way that allows quick retrieval of relevant context to support a query sent to an LLM.

- Engines: Provides natural language access to your data, supporting either a chat-style conversation or one-off, query-based questions.

- Data agents: LLM-powered knowledge workers augmented by tools, from simple helper functions to API integrations and more.

- Application integrations: Ties LlamaIndex back into the rest of your ecosystem, such as LangChain, Flask, Docker, ChatGPT, or something else.
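To make the connector, index, and engine roles more concrete, here is a minimal, dependency-free Python sketch of the same flow. It is not LlamaIndex's API: `load_documents`, `build_index`, `retrieve`, and `build_prompt` are hypothetical names, the documents are made up, and the "index" is a simple term-frequency lookup rather than the vector (embedding) indexes commonly used with LlamaIndex. The sketch stops at building the prompt; a real engine would send that prompt to an LLM.

```python
import math
import re
from collections import Counter


def load_documents():
    # Hypothetical stand-in for a data connector. In practice this text
    # would come from PDFs, APIs, SQL databases, and so on.
    return {
        "refunds.txt": "Refunds are issued within 14 days of a return being received.",
        "shipping.txt": "Standard shipping takes 3 to 5 business days within the EU.",
        "warranty.txt": "All devices include a two-year limited warranty.",
    }


def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(docs):
    # Toy "index": one term-frequency vector per document.
    return {name: Counter(tokenize(text)) for name, text in docs.items()}


def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(index, query, k=1):
    # Rank documents by similarity to the query and keep the top k names.
    q = Counter(tokenize(query))
    ranked = sorted(index, key=lambda name: cosine(index[name], q), reverse=True)
    return ranked[:k]


def build_prompt(docs, index, question, k=1):
    # Toy "query engine": place the retrieved context in front of the question.
    context = "\n".join(docs[name] for name in retrieve(index, question, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


docs = load_documents()
index = build_index(docs)
print(build_prompt(docs, index, "How long do refunds take?"))
```

Replacing the term-frequency vectors with embeddings from a model is what turns this keyword match into semantic search; the overall connector, index, and engine structure stays the same.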

LlamaIndex Use Cases

We're going to briefly go over two common use cases for LlamaIndex so you can get a general idea of how you might integrate LlamaIndex into your business.

Knowledge Agents

A Knowledge Agent is a type of agent that is given access to custom knowledge so that it can act as an expert in a specific area. For example, LlamaIndex can pull data from knowledge base tools such as Zendesk, providing the connected LLM with product-specific information.
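The agent pattern can be sketched in a few lines of Python. This is an illustration under stated assumptions, not LlamaIndex's agent API: the help-center articles are made up and stand in for data pulled from a tool like Zendesk, the tool names are hypothetical, and `choose_tool` replaces the step where a real agent would ask the LLM which tool to call.

```python
import re

# Hypothetical in-memory articles standing in for a knowledge base such as Zendesk.
HELP_CENTER = {
    "reset password": "Open Settings > Account and choose 'Reset password'.",
    "export data": "Use Settings > Data > Export to download a CSV file.",
}


def search_help_center(query: str) -> str:
    """Knowledge tool: return the first article whose title words all appear in the query."""
    words = set(re.findall(r"[a-z]+", query.lower()))
    for title, body in HELP_CENTER.items():
        if set(title.split()) <= words:
            return body
    return "No matching article found."


def word_count(text: str) -> str:
    """Helper tool: count the words in the request."""
    return str(len(text.split()))


TOOLS = {"search_help_center": search_help_center, "word_count": word_count}


def choose_tool(question: str) -> str:
    # Stand-in for the LLM's decision. A real agent would describe TOOLS
    # to the model and let it pick the tool and its arguments.
    if question.lower().startswith("count words"):
        return "word_count"
    return "search_help_center"


def run_agent(question: str) -> str:
    tool_name = choose_tool(question)
    observation = TOOLS[tool_name](question)
    # A real agent would hand the observation back to the LLM to phrase the reply.
    return f"[{tool_name}] {observation}"


print(run_agent("How do I reset my password?"))
```

The useful part of the design is the separation between the tools, which are ordinary functions, and the decision about which one to call, which is where the LLM does its work in a real agent.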

Q&A with Documents

LlamaIndex enables you to pull information from multiple document types (such as PDF and TXT) and index it so the LLM can generate document-specific answers. This effectively lets you chat with your documents and get quick answers to any question you may have.
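Before indexing, long files are usually split into smaller, overlapping chunks so that only the passages relevant to a question need to be sent to the LLM. LlamaIndex provides its own text splitters for this; the word-based function below is a simplified stand-in written for illustration, and the default `chunk_size` and `overlap` values are arbitrary assumptions.

```python
def chunk_words(text: str, chunk_size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into windows of chunk_size words, each sharing `overlap` words with the previous one."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once this window reaches the end, so no redundant tail chunk is emitted.
        if start + chunk_size >= len(words):
            break
    return chunks


sample = "LlamaIndex can load PDF and TXT files, split them into chunks, and index the chunks for retrieval."
for chunk in chunk_words(sample, chunk_size=8, overlap=2):
    print(chunk)
```

The overlap keeps a sentence that straddles a chunk boundary visible in both neighboring chunks, at the cost of storing a few words twice.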

Conclusion

Since the introduction of ChatGPT, we've seen a boom in AI apps across the board, with many companies wondering how to make use of the technology. LlamaIndex acts as a bridge between the language capabilities of LLMs and the wealth of data that organizations and individuals possess.

By making it simple to ingest, structure, and access private or domain-specific data, LlamaIndex enables you to create applications that not only understand human language but also engage in meaningful interactions with custom data. This opens up previously closed silos of information to the power of LLMs.

To learn more about LlamaIndex, head over to the official website and stay tuned for future posts as we'll be covering more in-depth subjects.